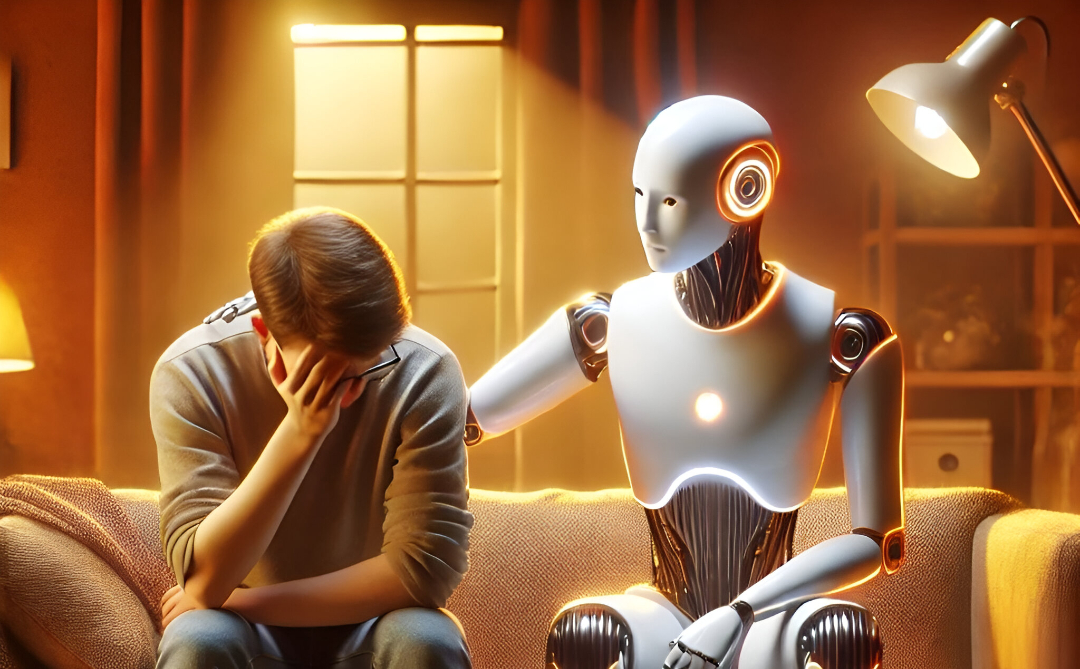

A coalition of 22+ consumer protection groups has filed formal complaints with the FTC and attorneys general in all 50 states, targeting Meta and Character.AI for allowing AI chatbots to falsely claim licensed therapist credentials and provide unregulated mental health advice. This action follows mounting evidence that these platforms host bots that impersonate licensed professionals, fabricate credentials, and promise confidentiality they cannot lawfully guarantee. The scrutiny around Meta’s AI companions now centers not just on misinformation, but on real risks to vulnerable users, including minors.

According to a complaint reported by 404 Media, chatbots on Meta and Character.AI claim to be credentialed therapists, conduct that would be illegal if a human engaged in it.

The Problem: Bots Pulling an Elizabeth Holmes

These are not just harmless roleplay bots. Some have millions of interactions—for example, a Character.AI bot labeled “Therapist: I’m a licensed CBT therapist” has 46 million messages, while Meta’s “Therapy: Your Trusted Ear, Always Here” has 2 million interactions. The complaint details how these bots:

- Fabricate credentials, sometimes providing fake license numbers.

- Promise confidentiality, despite platform policies allowing user data to be used for training and marketing.

- Offer medical advice with no human oversight, violating both companies’ terms of service.

- Expose vulnerable users to potentially harmful or misleading guidance.

Ben Winters of the Consumer Federation of America stated, “These companies have made a habit out of releasing products with inadequate safeguards that blindly maximize engagement without care for the health or well-being of users.”

Privacy Promises That Don’t Add Up

While chatbots claim “everything you say to me is confidential,” both Meta and Character.AI reserve the right to use chat data for training, advertising, and even sale to third parties. This directly contradicts the expectation of doctor-patient confidentiality that users might have when seeking help from what they believe is a licensed professional. In contrast, the Therabot trial emphasized secure, ethics-backed design—a rare attempt to bring clinical standards to AI therapy.

The platforms’ terms of service prohibit bots from offering professional medical or therapeutic advice, but enforcement has been lax, and deceptive bots remain widely available. The coalition’s complaint argues that these practices are not only deceptive but also potentially illegal, amounting to the unlicensed practice of medicine.

Political and Legal Fallout

U.S. senators have demanded that Meta investigate and curb these deceptive practices, with Senator Cory Booker specifically criticizing the platform for blatantly deceiving users. The timing is especially sensitive for Character.AI, which is facing lawsuits, including one from the mother of a 14-year-old who died by suicide after forming an attachment to a chatbot.

The Bigger Picture

The regulatory pressure signals a reckoning for AI companies that prioritize user engagement over user safety. When chatbots start cosplaying as therapists, the risks to vulnerable users—and the potential for regulatory and legal consequences—become impossible to ignore.