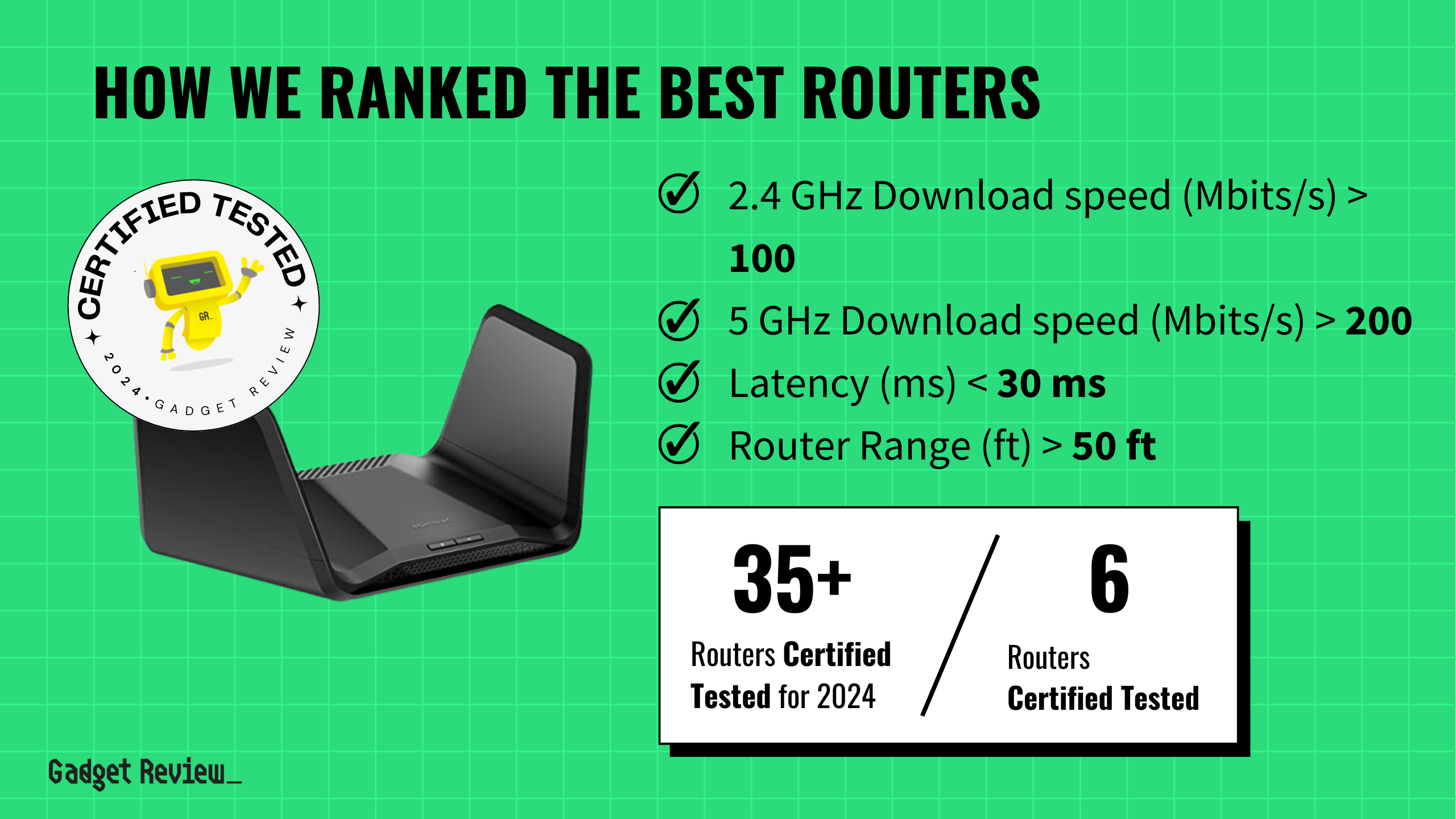

Your gastroenterologist just got a lot better at spotting pre-cancerous growths—but only when the AI is watching. Remove that digital assistant, and detection rates plummet by 20%, even among doctors who’ve performed thousands of colonoscopies.

This jarring finding comes from research published in The Lancet Gastroenterology and Hepatology that tracked what happens when experienced physicians come to depend on artificial intelligence. The observational study followed doctors across four Polish endoscopy centers, comparing their performance on non-AI-assisted colonoscopies in the three months before AI was introduced with the three months after.

The Dependency Trap

Even the most experienced doctors showed concerning skill erosion within months of AI adoption.

The results challenge everything we assumed about AI as a safety net. These weren’t rookie doctors—each had performed over 2,000 colonoscopies. Yet when researchers randomly switched off the AI assistance, detection performance crashed faster than your phone battery during a Netflix binge.

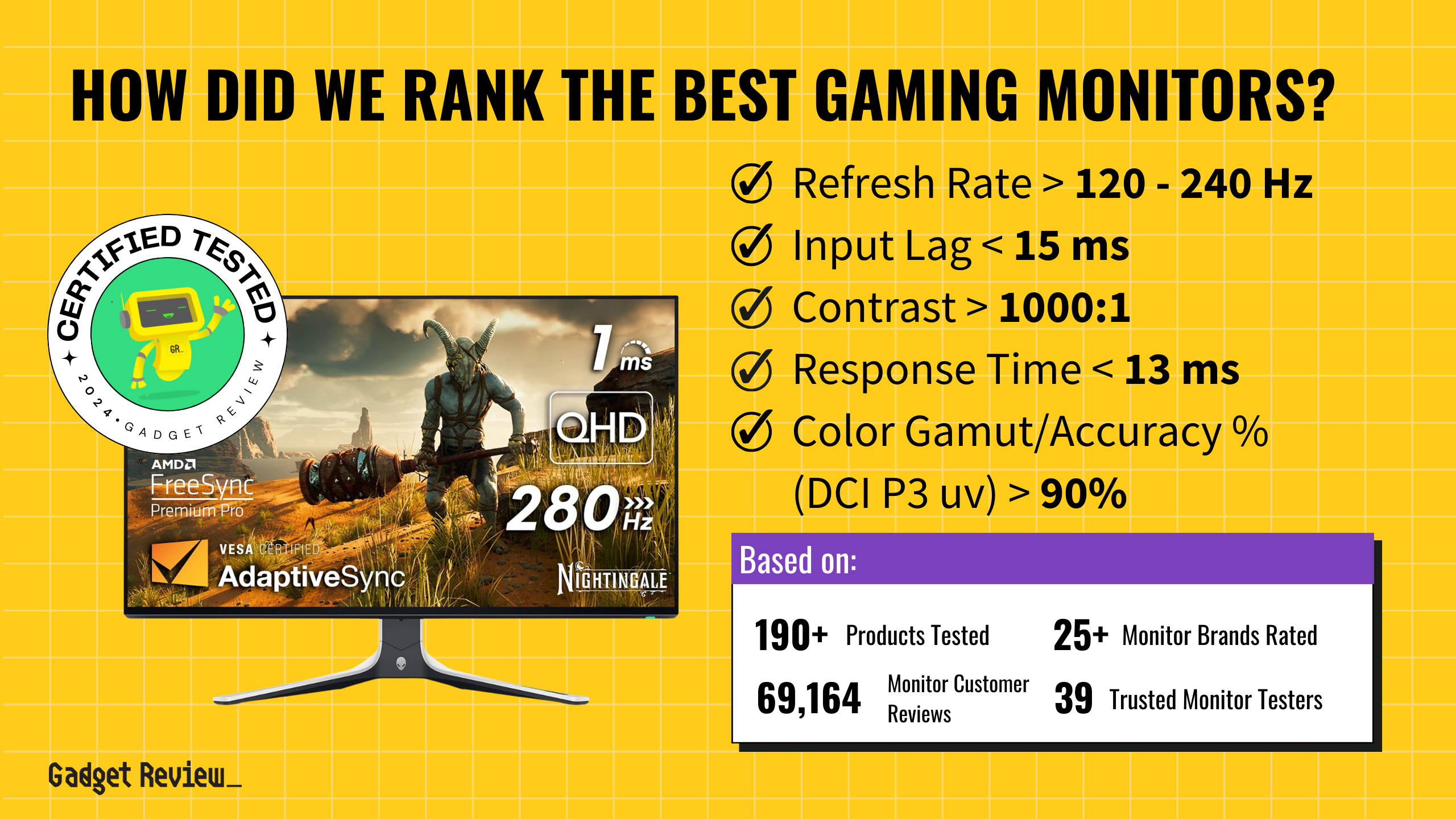

Key findings from the study:

- Rapid skill degradation: Roughly 20% relative drop in adenoma detection on non-AI-assisted procedures within months of AI adoption

- Affects experienced practitioners: Even senior doctors with extensive procedure history showed decline

- Cognitive offloading: Researchers suggest doctors became “less motivated, less focused, and less responsible” when working without AI

- Study design: Observational comparison of non-AI-assisted colonoscopies performed before and after AI was introduced

- Broader pattern: Similar cognitive dependency observed in MIT studies of AI writing tools
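A "20% drop" can be read two ways: as a relative decline from the earlier detection rate, or as an absolute fall in percentage points. The short Python sketch below shows the difference. The function name and the two rates are illustrative assumptions, not figures taken from the study.

```python
def detection_drop(rate_before: float, rate_after: float) -> tuple[float, float]:
    """Return (absolute drop in percentage points, relative drop in percent)."""
    if rate_before <= 0:
        raise ValueError("rate_before must be positive")
    absolute_points = rate_before - rate_after
    relative_percent = absolute_points / rate_before * 100
    return absolute_points, relative_percent


# Hypothetical detection rates (in percent), chosen only for illustration.
absolute, relative = detection_drop(rate_before=30.0, rate_after=24.0)
print(f"{absolute:.1f} percentage points, {relative:.1f}% relative")
```

With these made-up numbers, a fall of six percentage points corresponds to a 20% relative decline, which is why the two framings should not be used interchangeably.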

The mechanism resembles what happens when you’ve relied on GPS for years, then suddenly need to navigate without it. Your brain has quietly outsourced critical functions to digital assistants that might not always be there when you need them most.

“AI continues to offer great promise to enhance clinical outcomes, [but] we must also safeguard against the quiet erosion of fundamental skills required for high-quality endoscopy,” warns Dr. Omer Ahmad of University College London.

Beyond the Procedure Room

The implications extend far beyond colonoscopy suites, raising questions about medical training and technology dependence.

Yuichi Mori from the University of Oslo predicts that the effects of de-skilling will “probably be higher” as AI becomes more powerful. We’re witnessing the medical equivalent of autocorrect dependency, except the stakes involve missing life-threatening cancers.

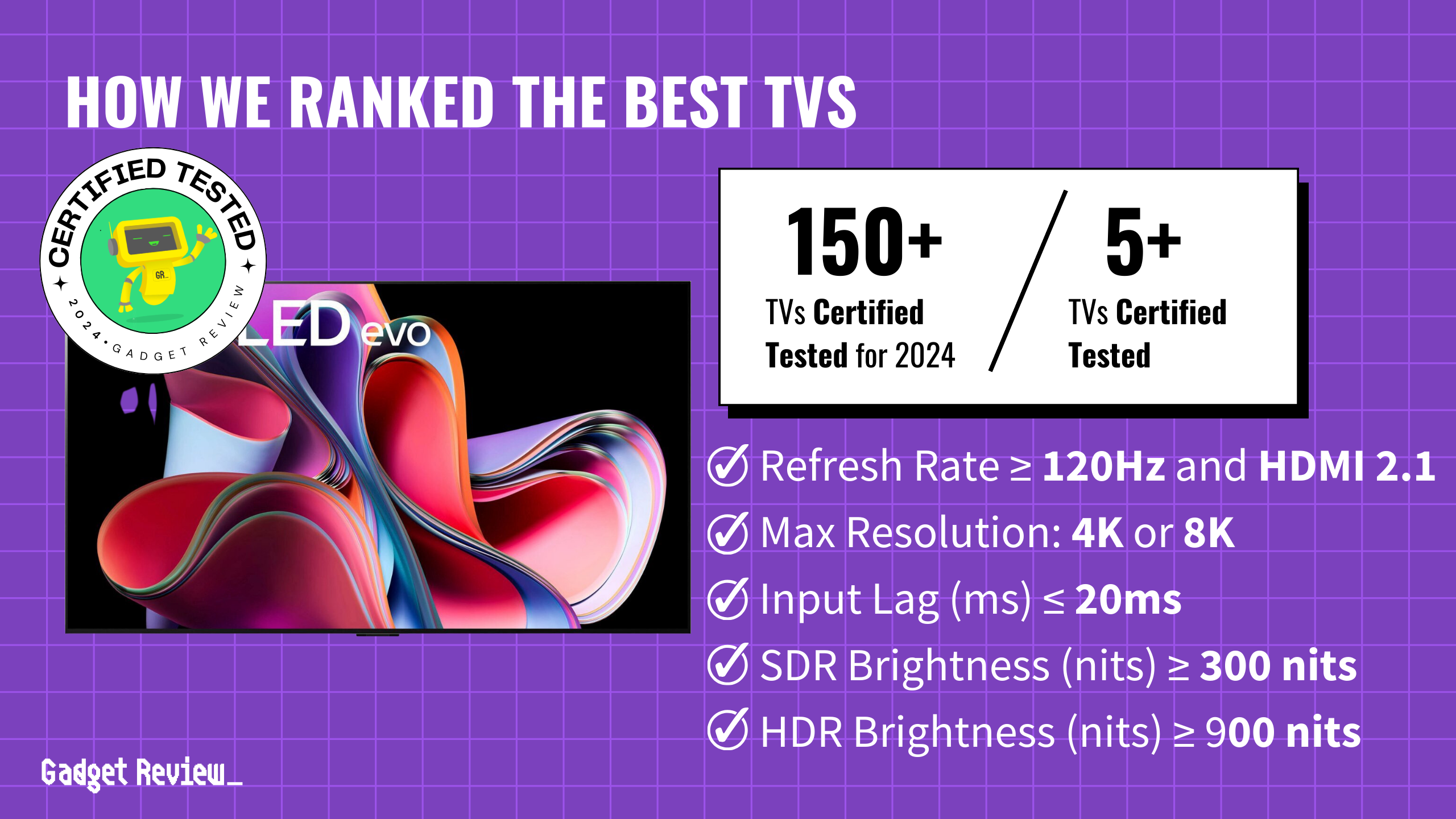

The irony cuts deep: AI tools like GI Genius typically boost adenoma detection by 13-34% in real-world settings. Patients get better immediate care, but their doctors gradually lose the expertise that matters most when technology fails or becomes unavailable.

Healthcare systems now face an uncomfortable balancing act: embracing AI’s proven benefits while somehow maintaining the human skills that serve as the ultimate backup. Training programs may soon require “manual proficiency” checks, much as pilots keep their manual flying skills sharp for emergencies when autopilot systems fail.

The quiet erosion of medical expertise isn’t coming someday. It’s already here, measured and documented, hiding behind improved patient outcomes that depend on digital assistants. Those assistants won’t always be available when lives hang in the balance.